Configure a Client-Server Environment with Kafka + ExtraHop

February 2, 2016

ExtraHop 5.0 makes using your data however you like even easier with Kafka integration.

Some Background Info on Kafka + ExtraHop

This blog post covers the configuration required to implement a working Client (EH Appliance) <--> Server (GNU/Linux) environment with Kafka. As of firmware version 5.0, ExtraHop appliances can relay user-created log messages (predefined in one or more triggers) to one or more Kafka Brokers.

What is Kafka?

According to Jay Kreps, one of Kafka's original developers:

"Apache Kafka is an open-source message broker project developed by the Apache Software Foundation written in Scala. The project aims to provide a unified, high-throughput, low-latency platform for handling real-time data feeds. The design is heavily influenced by transaction logs." (source)

At a high level, Kafka appears similar to other "message brokers" such as ActiveMQ and RabbitMQ. Nevertheless, there are a few important distinctions that set Kafka apart from the competition (source):

- It is designed as a distributed system which is very easy to scale out.

- It offers high throughput for both publishing and subscribing.

- It supports multi-subscribers and automatically balances the consumers during failure.

- It persists messages on disk and thus can be used for batched consumption such as ETL, in addition to real time applications.

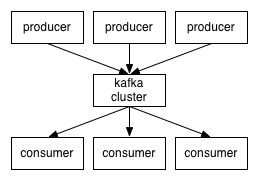

Basic Architecture:

- Topic(s): A categorised stream/feed of published "messages" managed by the Kafka cluster.

- Producer(s): A device that publishes messages ("pushes") to a new (or existing) Topic.

- Broker(s)/Kafka Cluster: Stores the messages and Topics in a clustered/distributed manner for resilience and performance.

- Consumer(s): A device that subscribes to one or more "Topics" and "pulls" messages from the Broker(s)/Kafka Cluster.

Figure 1 illustrates the basic interaction of "producers" (e.g. the EH appliance) and "consumers" (e.g. a GNU/Linux host).

Setup Prerequisites:

Server: Debian GNU/Linux 8.2 "Jessie" (or similar Debian derivative - e.g. Ubuntu)

Client (ExtraHop Appliance): Firmware version 5.0 or greater.

How to Set Up Your Server

Install the Java runtime and ZooKeeper prerequisites: sudo apt-get install default-jre-headless zookeeperd

Download and extract Kafka 0.9.0.0 (the latest release at the time of writing): wget --quiet --output-document - http://mirror.cc.columbia.edu/pub/software/apache/kafka/0.9.0.0/kafka_2.11-0.9.0.0.tgz | tar -xzf -
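The two steps above can be wrapped in a small script so the Kafka version and download location are parameters rather than hard-coded. This is a minimal sketch, not an official installer: it assumes `default-jre-headless` as the Debian Java package, keeps the Columbia mirror from the command above as an overridable default (`KAFKA_MIRROR`), and `kafka_tarball_url` and `install_kafka` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Minimal sketch: install the prerequisites and unpack a Kafka release.
# Assumptions: default-jre-headless as the Java package; KAFKA_MIRROR,
# kafka_tarball_url and install_kafka are hypothetical names for illustration.

# Base URL of the download mirror; override it if the mirror has moved.
KAFKA_MIRROR="${KAFKA_MIRROR:-http://mirror.cc.columbia.edu/pub/software/apache/kafka}"

# Print the tarball URL for a Scala build and Kafka version,
# e.g. "kafka_tarball_url 2.11 0.9.0.0".
kafka_tarball_url() {
    local scala_version="$1" kafka_version="$2"
    printf '%s/%s/kafka_%s-%s.tgz\n' \
        "$KAFKA_MIRROR" "$kafka_version" "$scala_version" "$kafka_version"
}

# Install Java and ZooKeeper, then stream the tarball straight into tar
# under a target directory (default: $HOME). Prints the extracted path.
install_kafka() {
    local scala_version="$1" kafka_version="$2" prefix="${3:-$HOME}"
    sudo apt-get install -y default-jre-headless zookeeperd || return 1
    mkdir -p "$prefix" || return 1
    # Without pipefail the pipeline's status is tar's; tar exits non-zero on an
    # empty or non-gzip stream, so a failed download is still reported.
    wget --quiet --output-document - \
        "$(kafka_tarball_url "$scala_version" "$kafka_version")" \
        | tar -xzf - -C "$prefix" || return 1
    printf '%s/kafka_%s-%s\n' "$prefix" "$scala_version" "$kafka_version"
}

# Example: KAFKA_DIR=$(install_kafka 2.11 0.9.0.0 "$HOME")
```

Release archives do not stay on mirrors forever, so if the download fails, point `KAFKA_MIRROR` at another Apache mirror or the Apache release archive.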

Stop the system ZooKeeper service, so that ZooKeeper can instead be started with the helper script and configuration bundled with Kafka: sudo systemctl stop zookeeper

Start ZooKeeper using Kafka's helper script (it runs in the foreground, so keep it open in its own terminal): /path/to/kafka_2.11-0.9.0.0/bin/zookeeper-server-start.sh /path/to/kafka_2.11-0.9.0.0/config/zookeeper.properties

Edit the Kafka configuration file "server.properties", which the Kafka service loads on startup (vi /path/to/kafka_2.11-0.9.0.0/config/server.properties), and set the following properties:

- host.name=$IP_OF_SERVER

- advertised.host.name=$IP_OF_SERVER

- advertised.port=9092

Here, $IP_OF_SERVER is the IP address of the GNU/Linux VM/machine hosting the Kafka service. The advertised host and port are what the broker hands back to clients, so they must be reachable from the EH appliance.
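If you would rather not edit the file by hand in vi, the same three properties can be set non-interactively. This is a minimal sketch assuming GNU sed (as on Debian) and a value free of `|`, `&` and backslashes, which holds for an IP address or port; `set_prop` is a hypothetical helper, not part of Kafka. It rewrites an existing entry for the key, uncommenting it if needed, or appends the key if it is absent.

```bash
#!/usr/bin/env bash
# Minimal sketch: set key=value in a Java .properties file.
# Assumptions: GNU sed (-i, -E); set_prop is a hypothetical helper; the value
# contains no '|', '&' or backslash (fine for IP addresses and ports).

set_prop() {
    local file="$1" key="$2" value="$3" key_re
    # Escape the dots in keys such as advertised.host.name for the regex.
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -qE "^[#[:space:]]*${key_re}=" "$file"; then
        # Rewrite every matching line, uncommenting it if it was commented out.
        sed -i -E "s|^[#[:space:]]*${key_re}=.*|${key}=${value}|" "$file"
    else
        # Key not present at all: append it.
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Example:
#   conf=/path/to/kafka_2.11-0.9.0.0/config/server.properties
#   set_prop "$conf" host.name "$IP_OF_SERVER"
#   set_prop "$conf" advertised.host.name "$IP_OF_SERVER"
#   set_prop "$conf" advertised.port 9092
```

Anchoring the match at the start of the line (after optional `#` and whitespace) keeps `host.name` from also matching `advertised.host.name`.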

Start the Kafka service: /path/to/kafka_2.11-0.9.0.0/bin/kafka-server-start.sh /path/to/kafka_2.11-0.9.0.0/config/server.properties > /path/to/kafka.log 2>&1 &
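Kafka needs ZooKeeper to be accepting connections before the broker starts, so when both are launched from a script it helps to wait on each port in turn. This is a minimal sketch under stated assumptions: `wait_for_port` and `start_kafka_stack` are hypothetical helpers, ports 2181 and 9092 are the defaults used in the steps above, and the port probe relies on Bash's `/dev/tcp` redirection plus coreutils `timeout`.

```bash
#!/usr/bin/env bash
# Minimal sketch: start ZooKeeper, wait for it, then start the Kafka broker.
# Assumptions: wait_for_port and start_kafka_stack are hypothetical helpers;
# 2181 and 9092 are the default ports used in the steps above.

# Succeed as soon as host:port accepts a TCP connection; give up after
# `attempts` failed tries (roughly one to two seconds each).
wait_for_port() {
    local host="$1" port="$2" attempts="${3:-30}" i
    for ((i = 0; i < attempts; i++)); do
        # Bash's /dev/tcp redirection opens a TCP connection; timeout caps each try.
        if timeout 1 bash -c ">/dev/tcp/$host/$port" 2>/dev/null; then
            return 0
        fi
        sleep 1
    done
    return 1
}

# Start both services in the background from an extracted Kafka directory.
# broker_host must match host.name in server.properties, because the broker
# binds only to that address once host.name is set.
start_kafka_stack() {
    local kafka_dir="$1" broker_host="$2" log_dir="${3:-$PWD}"
    "$kafka_dir/bin/zookeeper-server-start.sh" "$kafka_dir/config/zookeeper.properties" \
        > "$log_dir/zookeeper.log" 2>&1 &
    wait_for_port localhost 2181 || { echo "ZooKeeper did not come up" >&2; return 1; }
    "$kafka_dir/bin/kafka-server-start.sh" "$kafka_dir/config/server.properties" \
        > "$log_dir/kafka.log" 2>&1 &
    wait_for_port "$broker_host" 9092 || { echo "Kafka did not come up" >&2; return 1; }
}

# Example: start_kafka_stack /path/to/kafka_2.11-0.9.0.0 "$IP_OF_SERVER"
```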

(Optional) Run a Kafka console consumer locally to confirm that a topic is receiving messages: /path/to/kafka_2.11-0.9.0.0/bin/kafka-console-consumer.sh --zookeeper localhost:2181 --topic $NAME_OF_NEW_TOPIC_CREATED_BY_EH_TRIGGER --from-beginning Here, $NAME_OF_NEW_TOPIC_CREATED_BY_EH_TRIGGER is the name of the "Topic" the EH trigger publishes to. It does not need to be a new topic; existing "Topics" can be reused if desired.
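Before configuring the appliance, you can play the producer role yourself and confirm that the consumer above prints what you send. This is a minimal sketch assuming the `kafka-console-producer.sh` script from the extracted distribution and the broker's default `auto.create.topics.enable=true`, which lets a producer publish to a topic that does not exist yet; `produce_test_messages`, `KAFKA_DIR` and `BROKER` are hypothetical names.

```bash
#!/usr/bin/env bash
# Minimal sketch: publish test messages with Kafka's console producer.
# Assumptions: KAFKA_DIR points at the extracted distribution and BROKER is
# "$IP_OF_SERVER:9092"; both variables and the function name are hypothetical.

KAFKA_DIR="${KAFKA_DIR:-/path/to/kafka_2.11-0.9.0.0}"
BROKER="${BROKER:-${IP_OF_SERVER:-localhost}:9092}"

# Send each remaining argument as one message (one line) to the given topic.
produce_test_messages() {
    local topic="$1"
    shift
    # The console producer reads stdin line by line and exits at end of input.
    printf '%s\n' "$@" | "$KAFKA_DIR/bin/kafka-console-producer.sh" \
        --broker-list "$BROKER" --topic "$topic"
}

# Example: produce_test_messages smoke-test My1stMsg My2ndMsg 12345
```

If the messages show up in the console consumer, the server side is working, and any later problem is more likely in the appliance configuration.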

How to Set Up your ExtraHop Appliance

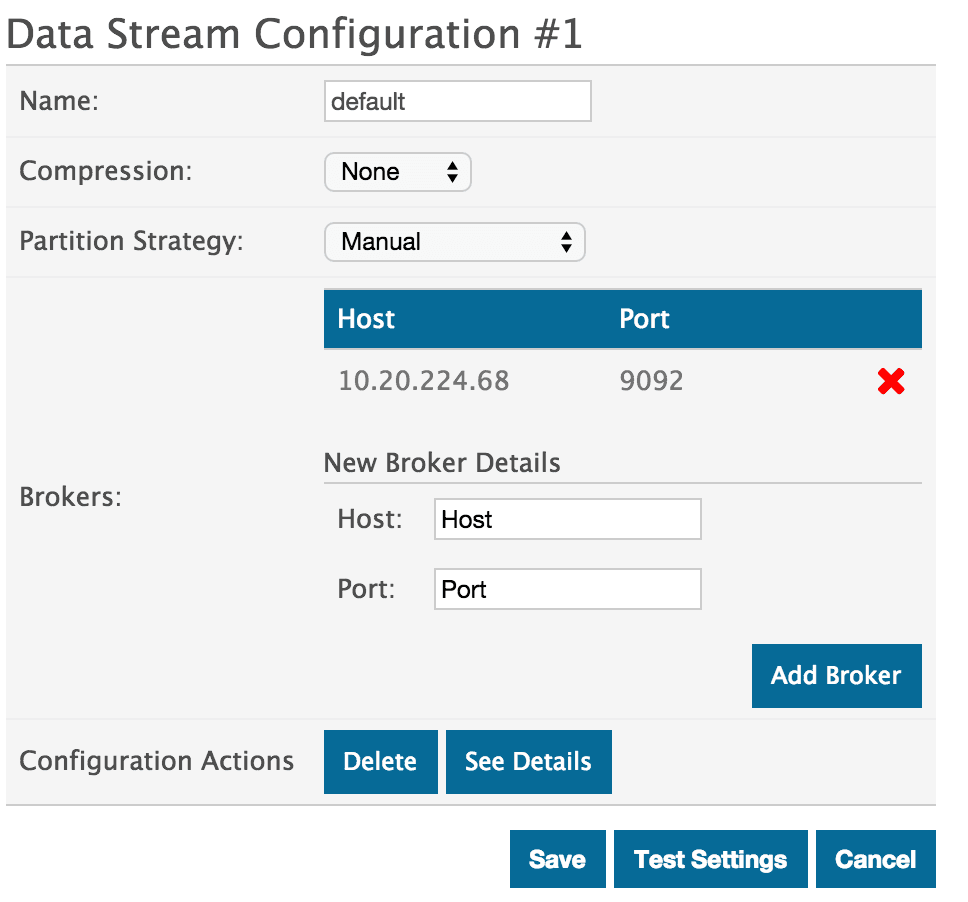

Navigate to the Open Data Streams page: "System Settings" -> "Administration" -> "System Configuration" -> "Open Data Streams"

Select the "kafka" option.

Create a new Open Data Stream for Kafka.

Set a memorable name for the new Kafka-related ODS.

Set the "Partition Strategy" to: manual

Set the "Host" to the IP address of the Kafka server: $IP_OF_SERVER

Set the "Port" to: 9092

Ensure the configuration works by clicking the "Test Settings" button.

Once the test confirms that the EH appliance can contact the Kafka server, save the configuration by clicking the "Save" button.

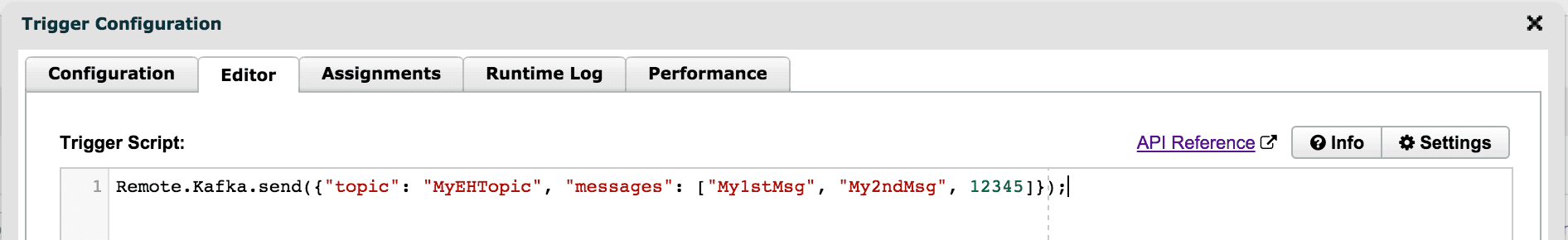

Example Trigger

Below is a simple trigger that transmits three Kafka messages (My1stMsg, My2ndMsg, 12345) when it fires. The Trigger API documentation for Kafka (here) provides further examples in which the messages are dynamic metric values rather than the static strings used in this example.

At this stage your EH appliance should send messages to the Kafka server each time your trigger fires. Note that the EH appliance can publish to "Topics" that do not exist yet; they are created when the first messages arrive.
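Once the trigger has fired, you can check on the server that all three messages arrived instead of scanning the consumer output by eye. This is a minimal sketch: `expect_messages` is a hypothetical helper that reads consumer output from stdin and fails if any expected message is missing. The example pipeline in the comments assumes the console consumer's `--max-messages` option (exit after that many messages), uses coreutils `timeout` so the check cannot hang forever, and assumes the appliance delivers the number 12345 as the text `12345`.

```bash
#!/usr/bin/env bash
# Minimal sketch: verify that expected messages appear in consumer output.
# Assumption: expect_messages is a hypothetical helper, not part of Kafka.

# Read messages (one per line) from stdin; succeed only if every expected
# message was seen at least once, reporting each missing one on stderr.
expect_messages() {
    local received missing=0 msg
    received=$(cat)
    for msg in "$@"; do
        # -x: whole-line match, -F: literal string, not a regex.
        if ! grep -qxF -- "$msg" <<< "$received"; then
            echo "missing message: $msg" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example (run on the Kafka server once the trigger has fired):
#   timeout 60 /path/to/kafka_2.11-0.9.0.0/bin/kafka-console-consumer.sh \
#       --zookeeper localhost:2181 --topic "$NAME_OF_NEW_TOPIC_CREATED_BY_EH_TRIGGER" \
#       --from-beginning --max-messages 3 \
#       | expect_messages My1stMsg My2ndMsg 12345
```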


Support Engineer

Myles Grindon is a Support Engineer for ExtraHop Networks who recently graduated from the University of Loughborough in the U.K. and moved from England to the Greater Seattle area. As a Support Engineer, he helps customers resolve a wide range of issues that vary from day to day. A Debian GNU/Linux fan through and through, Myles enjoys configuring, tweaking, breaking, fixing (hopefully!), and ultimately learning about UNIX-like and UNIX-based systems in his free time. He blogs about his Linux adventures (with a Debian twist) at https://myles.sh/